Sharing a crawler for pictures of beautiful women

It is written in Python 3.

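Before running it, install a couple of dependencies with pip:

```shell
pip3 install requests
pip3 install lxml
```

By default the crawler walks the entire site and saves the images on drive D.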
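Drive D only exists on Windows, and not on every Windows machine. If you want to run the crawler elsewhere, one option is to compute the save directory instead of hard-coding `base_dir` in `main()`. This is an assumption, not part of the original script. The helper name `default_base_dir` and the folder name `beauty_pics` are hypothetical. The returned path ends with a separator, so it also works with code that builds paths as `base_dir + title`:

```python
import os
from pathlib import Path


def default_base_dir(folder_name="beauty_pics"):
    """Hypothetical helper: choose a save directory that works on any OS.

    Uses D:/ when running on Windows and that drive exists,
    otherwise falls back to the current user's home directory.
    """
    d_drive = Path("D:/")
    root = d_drive if os.name == "nt" and d_drive.exists() else Path.home()
    # Trailing separator so callers can simply append a sub-folder name
    return str(root / folder_name) + os.sep


if __name__ == "__main__":
    print(default_base_dir())
```

In `main()` you would then write `base_dir = default_base_dir()`.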

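```python
import os
import threading
from urllib.parse import urljoin

import requests
from lxml import etree

TIMEOUT = 10  # seconds; keeps a stalled request from hanging a thread forever


def dir_exist(dir_name):
    """Create the directory (and any missing parents) if it does not exist yet."""
    os.makedirs(dir_name, exist_ok=True)


def safe_name(name):
    """Replace characters that Windows does not allow in file or folder names."""
    for ch in '\\/:*?"<>|':
        name = name.replace(ch, '_')
    return name.strip()


def pa_one_page(url, base_dir):
    """Download every image on a single gallery (article) page."""
    res = requests.get(url, timeout=TIMEOUT)
    tree = etree.HTML(res.text)
    title = safe_name(tree.xpath('//header/h1/a/text()')[0])
    save_dir = os.path.join(base_dir, title)
    dir_exist(save_dir)
    print('Crawling ' + title)
    for img in tree.xpath('//article/p/img'):
        # Resolve relative src values against the page URL
        pic_url = urljoin(url, img.xpath('./@src')[0])
        file_name = pic_url.split('/')[-1]
        full_file_name = os.path.join(save_dir, file_name)
        res = requests.get(pic_url, timeout=TIMEOUT)
        with open(full_file_name, 'wb') as f:
            f.write(res.content)
        print('Saved ' + full_file_name)


def pa_all_page(url, base_dir, thread_counter):
    """Crawl every gallery linked from one listing page, then free the thread slot."""
    try:
        res = requests.get(url, timeout=TIMEOUT)
        tree = etree.HTML(res.text)
        for a in tree.xpath('//header/h2/a'):
            one_page_url = urljoin(url, a.xpath('./@href')[0])
            pa_one_page(one_page_url, base_dir)
    finally:
        # Always release the permit, even if a request or the parsing fails
        thread_counter.release()


def all_page(url, base_dir):
    """Follow the "next page" links, crawling each listing page in its own thread."""
    thread_counter = threading.Semaphore(10)  # at most 10 listing pages at once
    while True:
        thread_counter.acquire()  # acquire a permit to cap the number of threads
        threading.Thread(target=pa_all_page,
                         args=(url, base_dir, thread_counter)).start()
        res = requests.get(url, timeout=TIMEOUT)
        tree = etree.HTML(res.text)
        next_page = tree.xpath('//li[@class="next-page"]/a/@href')
        if not next_page:  # no "next page" link: this was the last listing page
            break
        url = urljoin(url, next_page[0])
        print(url)


def main():
    base_dir = 'D:/美女图片/'  # where the images are saved
    all_page('https://www.xmkef.com/', base_dir)


if __name__ == '__main__':
    main()
```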
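The `threading.Semaphore(10)` in `all_page` limits how many listing pages are crawled at the same time. The main loop calls `acquire()` before starting each thread, and each worker calls `release()` when it finishes, so the 11th thread waits until a slot frees up. The stand-alone sketch below shows the same pattern without any network access. `run_throttled` and `fake_work` are hypothetical names: `fake_work` and its short sleep stand in for `pa_all_page`, and the counters exist only to measure peak concurrency.

```python
import threading
import time


def run_throttled(n_tasks, max_threads):
    """Start n_tasks threads, but let at most max_threads run at the same time.

    Returns the highest number of tasks observed running simultaneously.
    """
    slots = threading.Semaphore(max_threads)  # same role as thread_counter
    lock = threading.Lock()                   # protects the two counters below
    running = 0
    peak = 0

    def fake_work():
        nonlocal running, peak
        try:
            with lock:
                running += 1
                peak = max(peak, running)
            time.sleep(0.01)                  # stands in for downloading a page
            with lock:
                running -= 1
        finally:
            slots.release()                   # free the slot even if the work fails

    threads = []
    for _ in range(n_tasks):
        slots.acquire()                       # blocks while max_threads are busy
        t = threading.Thread(target=fake_work)
        t.start()
        threads.append(t)
    for t in threads:
        t.join()
    return peak


if __name__ == "__main__":
    print(run_throttled(30, 10))  # never more than 10
```

The `try`/`finally` around the work matters. A worker that releases only on its last line, as the original `pa_all_page` does, leaks a permit whenever it raises an exception. After ten such failures, `acquire()` blocks the main loop forever.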

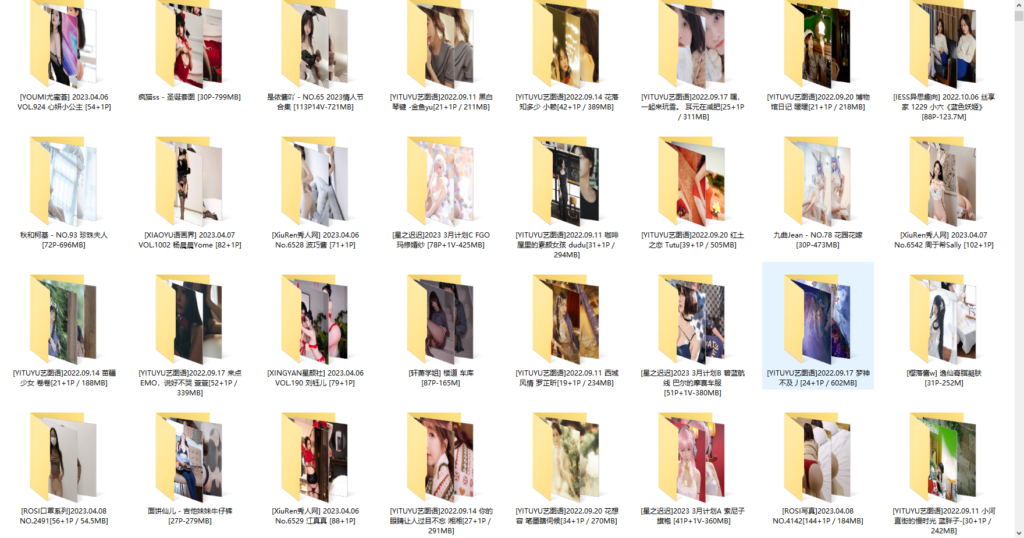

Run the code above to download the pictures.
